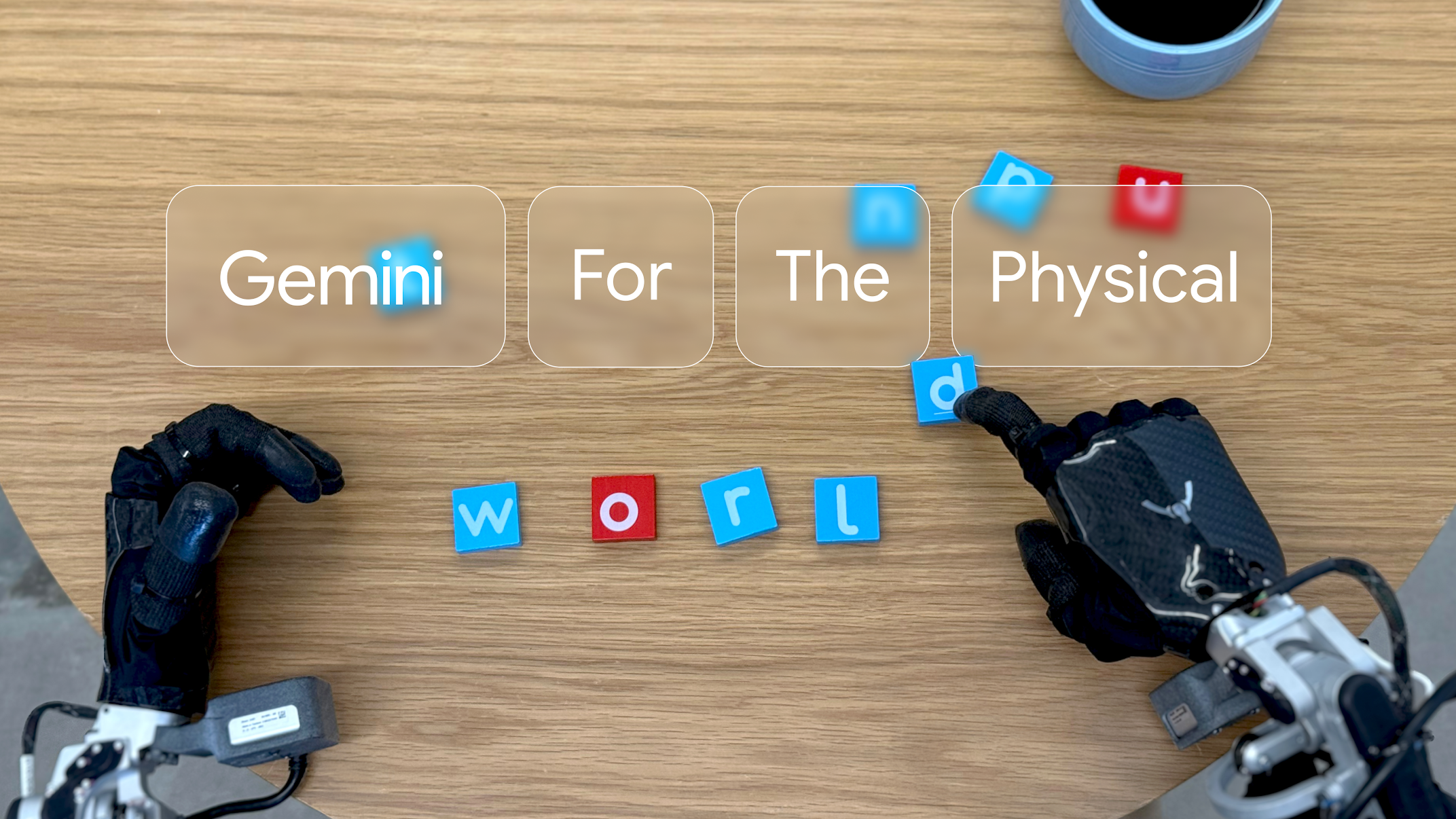

Google DeepMind redefines robotics with Gemini: smarter, safer, and more autonomous machines

Gemini Robotics: merging AI mastery with physical dexterity

Google DeepMind has unveiled Gemini Robotics, an AI model built on Gemini 2.0 technology. By combining multimodal understanding of environments with precise physical actions, the system enables robots to tackle unstructured tasks without task-specific training. Carolina Parada, DeepMind’s robotics lead, explains that this fusion allows robots to “interpret their surroundings and act meaningfully,” boosting autonomy in real-world settings such as warehouses or homes.

Three breakthroughs: generality, interactivity, and precision

Gemini Robotics addresses three core challenges. First, generality: robots adapt to unseen scenarios using contextual learning. Second, interactivity: they collaborate with humans and handle objects responsively, for example by handing over tools or adjusting grip strength. Third, precision: fine motor skills let them perform delicate tasks, such as folding paper, unscrewing caps, or arranging fragile items, with human-like accuracy.

Gemini Robotics-ER: advanced reasoning for complex tasks

The sibling model, Gemini Robotics-ER (Embodied Reasoning), adds spatial and procedural intelligence. It interprets dynamic scenarios: for example, packing a lunchbox by locating items, opening containers, and placing foods securely. Parada notes this “physical common sense” lets robots manage multi-step tasks, such as cleaning spills or assembling furniture, by breaking actions into logical sequences.

Safety first: ethical AI and risk-aware systems

Safety is central to DeepMind’s design. Gemini Robotics-ER autonomously evaluates risks before acting, such as potential collisions or unstable grips. Vikas Sindhwani, a lead researcher, highlights “layered safeguards” inspired by Asimov’s principles, including real-time hazard detection. Google also released safety benchmarks and a “Robot Constitution” to guide ethical behavior, with the aim that robots prioritize human well-being as they evolve.

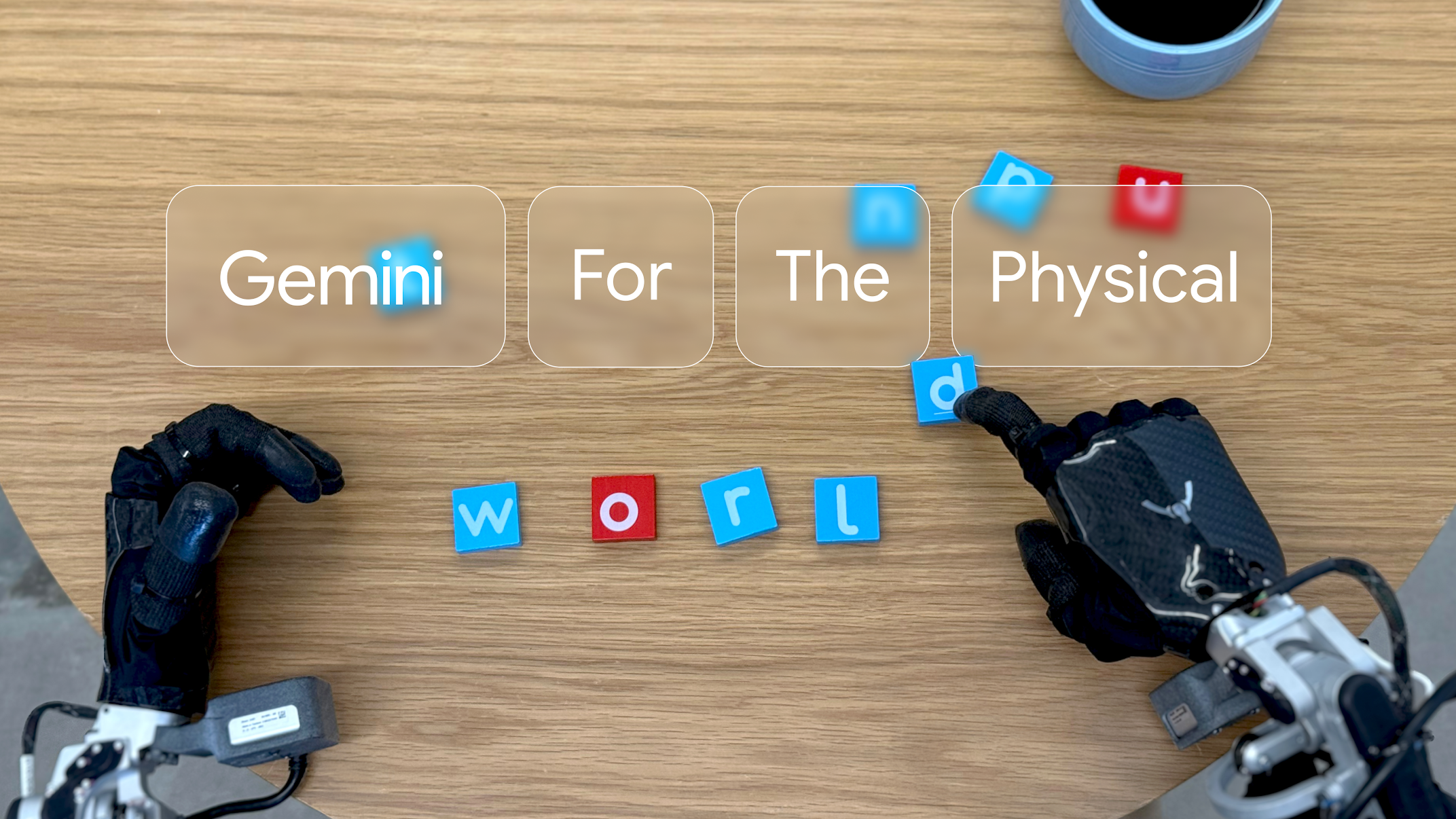
